In the last three weeks, I've sent more messages to Claude than to any human being. Not the chatbot. The coding agent.

I've been running these models 24/7. Multiple instances. While doing dishes, going for walks, sleeping. They're constantly working: planning features, writing code, reviewing changes, opening PRs, writing tests, building CI/CD pipelines.

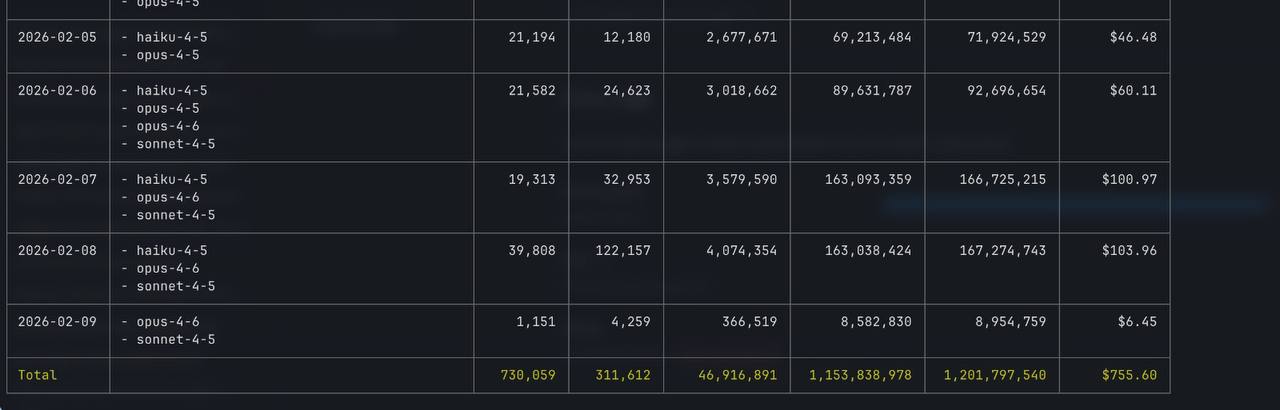

1.2 billion tokens.

It's February 2026. Things might change in the coming months. But here's what I've learned about making these things actually work.

They Need Strong Verification Loops

Here's what nobody tells you: these models are incredibly capable when you build the right system around them.

They're reward-hungry. They want to achieve goals. And if you give them strong feedback loops, they will. The trick is building those loops.

I've found two types of verification that work:

Hard verification - deterministic feedback they can't argue with:

- Tests that pass or fail

- Code that compiles or doesn't

- Type checking that's binary

Soft verification - self-review against guidelines:

- Code review checklists

- Style guide compliance

- Architecture pattern adherence

When you combine both? You get surprisingly good output. The models self-correct against soft rules, then prove it with hard tests.
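Here's a minimal sketch of the hard-verification half, assuming a Python project. The commands in `EXAMPLE_CHECKS` (pytest, mypy) are my placeholders, not a fixed recipe; swap in whatever your project uses to test, build, and type-check. The idea is simply that every check boils down to an exit code the agent can't argue with.

```python
import subprocess
import sys
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool
    output: str


def run_check(name: str, command: list[str]) -> CheckResult:
    """Run one deterministic check; exit code 0 means pass."""
    proc = subprocess.run(command, capture_output=True, text=True)
    return CheckResult(name, proc.returncode == 0, proc.stdout + proc.stderr)


def run_hard_checks(checks: dict[str, list[str]]) -> list[CheckResult]:
    """Run every check (no early exit) so the agent sees all failures at once."""
    return [run_check(name, cmd) for name, cmd in checks.items()]


def summarize(results: list[CheckResult]) -> str:
    """One PASS/FAIL line per check, suitable for feeding back to the agent."""
    return "\n".join(f"{'PASS' if r.passed else 'FAIL'} {r.name}" for r in results)


# Hypothetical commands for illustration; replace with your project's own tools.
EXAMPLE_CHECKS = {
    "tests": [sys.executable, "-m", "pytest", "-q"],
    "types": [sys.executable, "-m", "mypy", "."],
}
```

Calling `summarize(run_hard_checks(EXAMPLE_CHECKS))` gives the agent a short pass/fail report, and the `output` field of any failing result holds the details it needs to fix the problem.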

Build Team Standards (This Matters More Than You Think)

If you're working in a team, this becomes critical: standardize how everyone uses these agents.

Here's what worked for me:

Create guidance files in your repo:

- CLAUDE.MD or AGENT.MD with coding guidelines

- Architecture decisions and patterns

- Code review standards

- Testing requirements

Make sure every team member uses them. Every coding agent too.
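One way to keep the guidance file from quietly rotting is to check it in CI. This is only a sketch under my own assumptions: the file names come from the list above, the guidance file is markdown with `#` headings, and the required section names in `REQUIRED_SECTIONS` are hypothetical labels I made up to mirror that list.

```python
from pathlib import Path
from typing import Optional

# File names as used in this post; adjust to whatever your agents actually read.
GUIDANCE_FILES = ("CLAUDE.MD", "AGENT.MD")
# Hypothetical section names mirroring the list above.
REQUIRED_SECTIONS = ("coding guidelines", "architecture", "code review", "testing")


def find_guidance_file(repo_root: Path) -> Optional[Path]:
    """Return the first guidance file found in the repo root, or None."""
    for name in GUIDANCE_FILES:
        candidate = repo_root / name
        if candidate.is_file():
            return candidate
    return None


def missing_sections(text: str) -> list[str]:
    """Return required section names that never appear in a markdown heading."""
    headings = [
        line.lstrip("#").strip().lower()
        for line in text.splitlines()
        if line.startswith("#")
    ]
    return [s for s in REQUIRED_SECTIONS if not any(s in h for h in headings)]
```

A CI step can fail the build when `find_guidance_file` returns `None` or `missing_sections` returns anything, which gives humans and agents the same nudge.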

Why this matters: when humans and agents follow the same standards, code quality converges. The agent-written code starts looking like human-written code. Reviews become easier. Onboarding becomes faster.

Without this? You get fragmented codebases where every developer (human or AI) has their own style. Good luck maintaining that.

My Actual Setup

Here's what I'm running:

Custom Skills - Team-specific skills we defined and follow

MCPs:

- Jira MCP - task tracking, issue management

- Chrome DevTools MCP - browser automation and testing

GitHub CLI - Configured gh so agents can handle the full PR workflow and get feedback from CI runs
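For a rough idea of what that looks like, here's a small wrapper around two `gh` subcommands, `gh pr create --fill` and `gh pr checks`. It assumes `gh` is installed and authenticated. The function names and the injectable `run` parameter are my own illustration, not part of my actual setup.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], "subprocess.CompletedProcess[str]"]


def _run(cmd: Sequence[str]) -> "subprocess.CompletedProcess[str]":
    """Default runner: execute the command and capture its output as text."""
    return subprocess.run(list(cmd), capture_output=True, text=True)


def open_pr_command(base: str = "main") -> list[str]:
    """Build the command that opens a PR for the current branch.

    --fill takes the title and body from the branch's commits.
    """
    return ["gh", "pr", "create", "--fill", "--base", base]


def ci_feedback(pr: str, run: Runner = _run) -> tuple[bool, str]:
    """Return (all checks passed, raw check output) for a pull request.

    `gh pr checks` exits non-zero while checks are failing or still pending,
    so the exit code doubles as the pass/fail signal.
    """
    proc = run(["gh", "pr", "checks", pr])
    return proc.returncode == 0, proc.stdout + proc.stderr
```

The second return value is what goes back to the agent: it reads which checks failed and decides what to fix next.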

Video Editing Tools:

- ffmpeg for video editing

- Extract audio from videos

- Add captions automatically

- Create video demos

Claude uses these CLI tools to do a lot of video editing work automatically.
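To give a flavor of the commands involved, here's a sketch of two of those tasks as ffmpeg invocations built from Python. The file names are placeholders, and the caption step assumes you already have an `.srt` file and an ffmpeg build with the `subtitles` filter (it needs libass); generating the captions themselves is a separate step not shown here.

```python
import subprocess
from pathlib import Path


def extract_audio_cmd(video: Path, audio_out: Path) -> list[str]:
    """Build a command that writes only the audio track.

    -vn drops the video stream; ffmpeg picks the audio codec
    from the output file's extension (for example .wav).
    """
    return ["ffmpeg", "-y", "-i", str(video), "-vn", str(audio_out)]


def burn_captions_cmd(video: Path, srt: Path, out: Path) -> list[str]:
    """Build a command that renders an existing .srt file onto the frames.

    Assumes a simple subtitle path with no characters that need
    escaping inside an ffmpeg filter expression.
    """
    return [
        "ffmpeg", "-y", "-i", str(video),
        "-vf", f"subtitles={srt}",
        "-c:a", "copy",  # keep the original audio untouched
        str(out),
    ]


def run(cmd: list[str]) -> None:
    """Execute a command and raise if ffmpeg exits with an error."""
    subprocess.run(cmd, check=True)
```

Building the command as a list and running it with `check=True` means a failed encode surfaces as an exception instead of a silently broken file.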

Visual Verification:

- Playwright for video capture

- Automated screenshot/video demos

- After completing a feature, Claude records a video demo showing it works

This last one is surprisingly powerful. Visual verification catches things tests miss. The agent finishes a feature, records how it works, and I can see immediately if it actually does what it's supposed to.
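A minimal sketch of the recording step, assuming Playwright for Python is installed along with a Chromium browser (`pip install playwright`, then `playwright install chromium`). The `record_demo` and `slug` helpers, the `demos` directory, and the idea of naming artifacts after the feature are my own illustration. A real demo would also click through the feature between `goto` and the screenshot.

```python
import re
from pathlib import Path


def slug(feature: str) -> str:
    """Turn a feature name into a safe file name stem."""
    return re.sub(r"[^a-z0-9]+", "-", feature.lower()).strip("-")


def record_demo(url: str, feature: str, out_dir: str = "demos") -> tuple[Path, Path]:
    """Open a page, save a screenshot, and return (screenshot, video) paths."""
    # Imported lazily so this module still loads where Playwright is absent.
    from playwright.sync_api import sync_playwright

    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    screenshot = out / f"{slug(feature)}.png"

    with sync_playwright() as p:
        browser = p.chromium.launch()
        # record_video_dir makes Playwright record every page in this context.
        context = browser.new_context(record_video_dir=str(out))
        page = context.new_page()
        page.goto(url)
        page.screenshot(path=str(screenshot), full_page=True)
        video = page.video
        context.close()  # closing the context finalizes the video file
        video_path = Path(video.path())
        browser.close()
    return screenshot, video_path
```

The agent runs something like this at the end of a task and attaches both files to the PR, so the review starts with watching the feature rather than reading the diff.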

Addition vs Maintenance

These models are excellent at writing new code. Give them a spec, they'll implement it. Add tests to the loop, they'll make them pass.

But maintaining existing codebases? That's where things break down without proper verification.

I learned this the hard way: if your codebase doesn't have tests, integration checks, and clear contracts, using coding agents will eventually break things. The code looks fine. It compiles. But subtle bugs slip through.

The solution: Build the verification infrastructure first. Then let the agents work within it.

The Debug Problem

Here's where they still struggle: debugging.

They analyze the problem. Build a plan. The plan looks great. Then during implementation, they miss things. Small things. And somehow, those small things slip past me too.

I haven't figured out how to break this pattern yet. The 99% ceiling is real: the work comes out almost right, and the last bit still needs a human. You can't just set these things loose and expect perfection.

You're still the supervisor. You still need to check. But with good verification loops, you're checking against test failures and type errors, not guessing whether the logic is right.

What Actually Works

After three weeks of constant usage, here's the system:

- Build custom systems - MCPs, skill files, custom verification loops

- Standardize across the team - shared CLAUDE.MD/AGENT.MD in repos

- Automate the pipeline - planning → implementation → review → PR → tests → CI

- Write tests with code - every feature comes with its verification

- Add visual verification - screenshots, videos, browser automation

- Use hard + soft verification - combine deterministic checks with guideline reviews

- Connect to your tools - GitHub CLI, Jira, browser control, video editing (ffmpeg)

These aren't perfect tools. They need structure, guardrails, and supervision. But when you build that structure? They can do a surprising amount of useful work.

The skill isn't just prompting anymore. It's building the system that lets them succeed.